Although GPT-4 was quite impressive when it launched, some observers have noticed a decrease in its accuracy and effectiveness. These observations have been circulating online for several months, including on the OpenAI forums.

A study by researchers at Stanford University and UC Berkeley suggests that, rather than becoming better or more accurate in its responses, GPT-4 has actually got worse after subsequent updates.

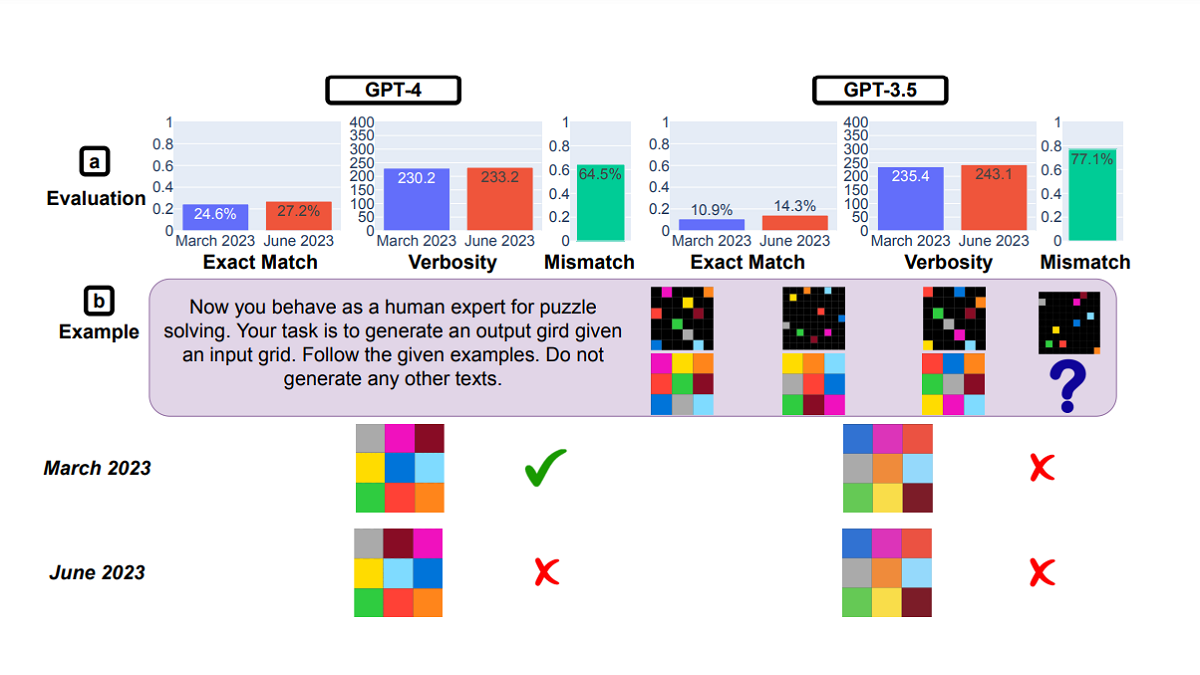

The study, titled ‘How Is ChatGPT’s Behavior Changing over Time?’, examined how the performance of GPT-4 and its predecessor, GPT-3.5, changed between the March and June 2023 versions of each model.

GPT-4 is getting worse over time, not better.

Many people have reported noticing a significant degradation in the quality of the model responses, but so far, it was all anecdotal.

But now we know.

At least one study shows how the June version of GPT-4 is objectively worse than… pic.twitter.com/whhELYY6M4

— Santiago (@svpino) July 19, 2023

When researchers tested both versions of GPT-4 on a dataset of 500 problems, the March version achieved a 97.6% accuracy rate, with 488 correct answers. In June, however, after GPT-4 had received updates, its accuracy dropped to just 2.4%, with only 12 correct answers.
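Both percentages follow directly from the raw counts over the same 500 problems. The following is a minimal Python sketch of that arithmetic; the helper name `accuracy_percent` is our own illustration, not something taken from the study.

```python
def accuracy_percent(correct: int, total: int) -> float:
    """Return the share of correct answers as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * correct / total


TOTAL_PROBLEMS = 500  # dataset size reported for the test

# Correct-answer counts reported for the March and June versions of GPT-4
for label, correct in [("March", 488), ("June", 12)]:
    pct = accuracy_percent(correct, TOTAL_PROBLEMS)
    print(f"{label}: {correct}/{TOTAL_PROBLEMS} = {pct:.1f}%")
```

Running it prints 97.6% for March and 2.4% for June, matching the figures above.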

The researchers also used chain-of-thought prompting, which asks the model to reason step by step before answering. When they asked GPT-4 “Is 17,077 a prime number?”, the June version not only gave the incorrect answer “No” but also failed to provide any explanation of its reasoning, according to the researchers.
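For reference, 17,077 is in fact prime, so the correct answer is “Yes”. One simple way to confirm this is trial division: a composite number must have a divisor no larger than its square root, so it is enough to test divisors up to ⌊√17077⌋ = 130. The Python sketch below illustrates that check; `is_prime` is a hypothetical helper for illustration, not code from the study.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division: test 2, then odd divisors up to floor(sqrt(n))."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Any factor pair of n includes one factor <= isqrt(n)
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


n = 17077
print(math.isqrt(n))  # → 130, the largest divisor that needs checking
print(is_prime(n))    # → True
```

Trial division is slow for very large numbers, but for a five-digit value like this it needs only a few dozen checks.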

GPT-3.5 giving better responses

It’s worth noting that GPT-4 is currently available to developers and ChatGPT Plus subscribers. By contrast, a user who asks GPT-3.5 the same question through the free ChatGPT research preview will not only get the correct answer but also receive a thorough explanation of the mathematical process.

The study also found a decline in GPT-4’s code generation performance. On a dataset of 50 easy problems from LeetCode, the share of GPT-4’s answers that were directly executable dropped from 52% in March to just 10% in June.

Adding to the concern, Twitter commentator @svpino pointed to rumours that OpenAI could be using “smaller and specialized GPT-4 models that act similarly to a large model but are less expensive to run.”

If true, this cheaper and faster approach could explain a decline in the quality of GPT-4’s responses, and it would come at a critical moment, when numerous major organisations rely on OpenAI’s technology.

It could be nothing (big)

However, some believe that a change in behaviour doesn’t necessarily mean a decrease in GPT-4’s capability.

We dug into a paper that’s been misinterpreted as saying GPT-4 has gotten worse. The paper shows behavior change, not capability decrease. And there's a problem with the evaluation—on 1 task, we think the authors mistook mimicry for reasoning.

w/ @sayashk https://t.co/ZieaBZLRFy

— Arvind Narayanan (@random_walker) July 19, 2023

The study itself acknowledges this distinction: “A model that has a capability may or may not display that capability in response to a particular prompt.” In other words, users may need to try different prompts to get the desired outcome.

When GPT-4 was first revealed, OpenAI explained that it had used Microsoft Azure AI supercomputers to train the language model for six months, and claimed that this made the model 40% more likely to generate “the desired information from user prompts.”

OpenAI CEO Sam Altman recently expressed his disappointment in a tweet after the Federal Trade Commission (FTC) launched an investigation into whether ChatGPT has breached consumer protection laws.

it is very disappointing to see the FTC's request start with a leak and does not help build trust.

that said, it’s super important to us that out technology is safe and pro-consumer, and we are confident we follow the law. of course we will work with the FTC.

— Sam Altman (@sama) July 13, 2023

“We’re transparent about the limitations of our technology, especially when we fall short. And our capped-profits structure means we aren’t incentivized to make unlimited returns,” tweeted Altman.

As OpenAI becomes increasingly engaged in the politics of AI regulation and in discussions about the risks of AI, the least it can do for its users is offer a brief look behind the scenes to help them understand why the AI they pay to use isn’t behaving the way a good chatbot should.

Isa Muhammad is a writer and video game journalist covering many aspects of entertainment media including the film industry. He's steadily writing his way to the sharp end of journalism and enjoys staying informed. If he's not reading, playing video games or catching up on his favourite TV series, then he's probably writing about them.