Artificial intelligence company OpenAI has recently launched GPT-4, which some are already calling “the most powerful AI language model available in the world today”, and it’s still being worked on.

Many believe that nascent technologies such as the metaverse, blockchain and Web3 will have a negative impact on society, and AI is no exception. In fact, it may now be at the top of the list.

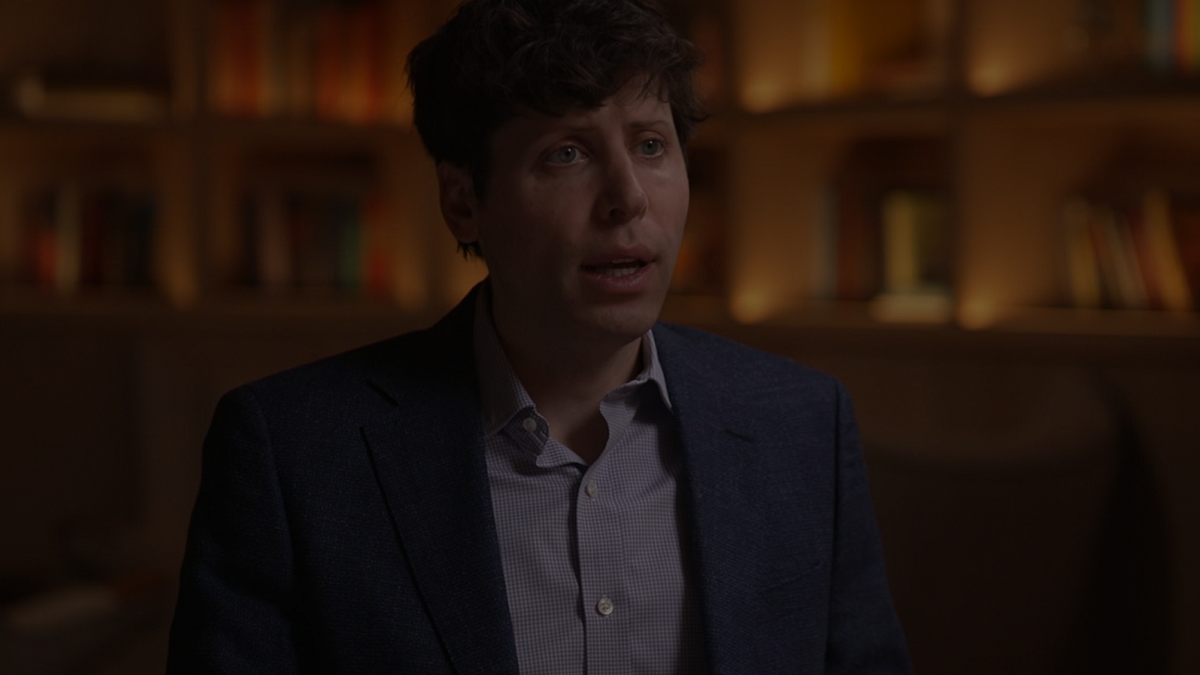

In a recent interview with ABC News, OpenAI CEO Sam Altman acknowledged the risks associated with the company’s AI ambitions. “We’ve got to be careful here,” said Altman. “I think people should be happy that we are a little bit scared of this.”

The CEO went on to say that AI will reshape society as we know it and that, although it carries risks, it could also be “the greatest technology humanity has yet developed.”

When asked if he was personally scared of the negative impacts of AI, Altman replied, “I think if I said I were not, you should either not trust me or be very unhappy I am in this job.”

Regulation and societal checks

Altman said that OpenAI needs involvement from both regulators and society, as their feedback will help curb the potential negative consequences the technology could have on the world. He added that he is in “regular contact” with government officials.

“I’m particularly worried that these models could be used for large-scale disinformation,” Altman said. “Now that they’re getting better at writing computer code, [they] could be used for offensive cyberattacks.”

Another worrying aspect of AI’s rise is that other tech companies around the world will start building AI models of their own, something Altman also fears.

“There will be other people who don’t put some of the safety limits that we put on,” he added. “Society, I think, has a limited amount of time to figure out how to react to that, how to regulate that, how to handle it.”

The AI isn’t perfect and can mislead

The CEO added that another persistent issue with the company’s AI model is misinformation. “The thing that I try to caution people the most is what we call the ‘hallucinations problem’,” Altman said. “The model will confidently state things as if they were facts that are entirely made up.”

According to OpenAI, the model has this issue because it uses deductive reasoning instead of memorising. “The right way to think of the models that we create is a reasoning engine, not a fact database,” Altman said. “What we want them to do is something closer to the ability to reason, not to memorise.”

Altman added that society needs time to get used to ChatGPT and that OpenAI is gathering a great deal of feedback from users. “People need time to update, to react, to get used to this technology [and] to understand where the downsides are and what the mitigations can be.”

The CEO went on to say that OpenAI has a team of policymakers who decide what information goes into ChatGPT as well as what the language model is allowed to share with users. “We won’t get it perfect the first time, but it’s so important to learn the lessons and find the edges while the stakes are relatively low.”

How long until AI replaces workers?

One of the most concerning impacts AI could have on humanity is widespread job loss, and the outlook already looks bleak for artists. Altman says that AI will replace certain jobs in the near future, possibly a lot faster than we think: “That is the part I worry about the most.”

However, Altman also encourages people to see ChatGPT as a tool that can help them improve rather than as a replacement. “Human creativity is limitless, and we find new jobs. We find new things to do.”

In outlining some of the benefits of AI models, Altman says, “We can all have an incredible educator in our pocket that’s customised for us, that helps us learn. We can have medical advice for everybody that is beyond what we can get today.”

As for education, Altman says it’s going to have to change, adding that many students will have a teacher that goes beyond the classroom. “One of the ones that I’m most excited about is the ability to provide individual learning for each student.”

OpenAI’s end goal is to build artificial general intelligence (AGI), which could lead to a future in which AI systems become generally smarter than humans. The company’s GPT-4 is only one step towards bringing this ambition to life.

AI, like every other human invention, has both positive and negative impacts, but the world should have a regulatory body in place that can shut it down if it goes too far.

For more on artificial intelligence, please see our recent exclusive interview with Dr Emmanuel Maggiori on Why AI Isn’t As Smart As The Industry Promises.

Isa Muhammad is a writer and video game journalist covering many aspects of entertainment media including the film industry. He's steadily writing his way to the sharp end of journalism and enjoys staying informed. If he's not reading, playing video games or catching up on his favourite TV series, then he's probably writing about them.