Over the past year, generative AI has become increasingly common. While many see it as a phenomenal step forward, others, including artists, authors and entertainers, oppose the new technology.

Many creatives feel generative AI threatens their careers. George R.R. Martin, whose series A Song of Ice and Fire was adapted into the HBO series Game of Thrones, is just one of the Authors Guild members currently suing OpenAI over copyright infringement. Likewise, a group of artists filed a lawsuit against DeviantArt, Midjourney and Stability AI in January.

Despite these lawsuits, AI models are still being trained on material found on the internet, which means creatives’ works are being used to train AI models to produce competing works. In some cases, AI developers use this material without permission and without compensating the creators. Fortunately for creatives, Nightshade, a tool currently in development, aims to let anyone protect their work from being used to train AI models.

When is a dog not a dog?

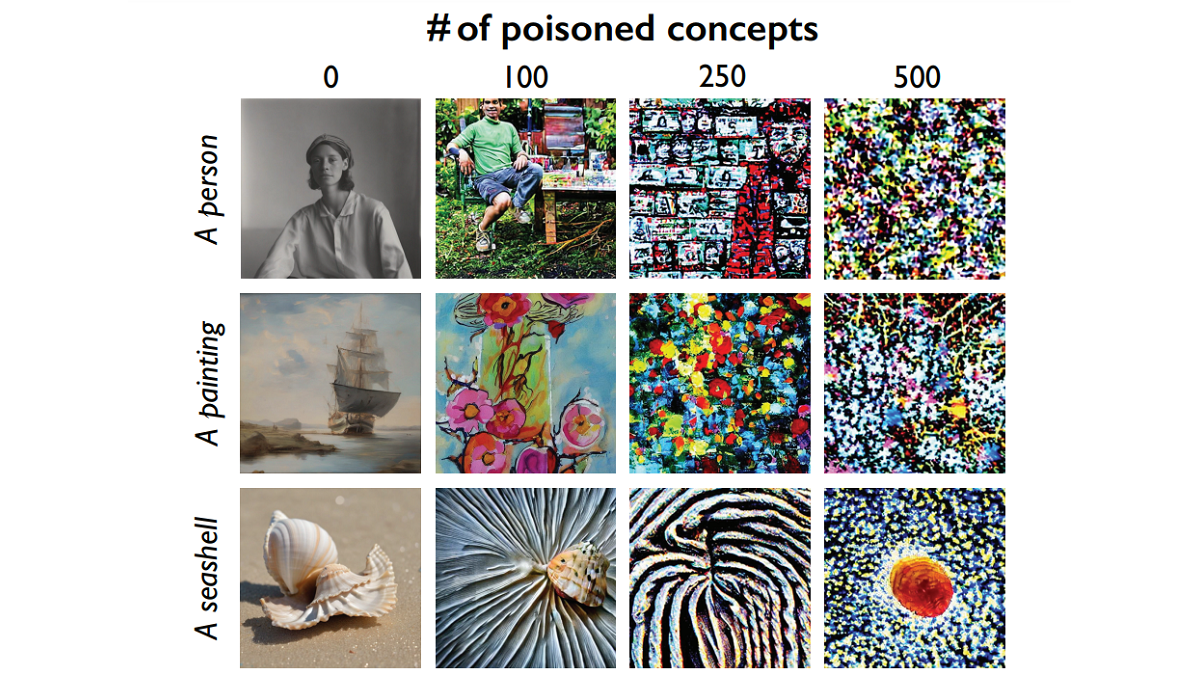

Created by researchers at the University of Chicago, Nightshade will be offered as an optional setting in Glaze, the researchers’ existing tool, which subtly alters an image’s pixels to make the artwork difficult for AI models to copy and learn from. Nightshade goes further than confusing AI models, however: it tricks them into learning the wrong names for objects and settings.

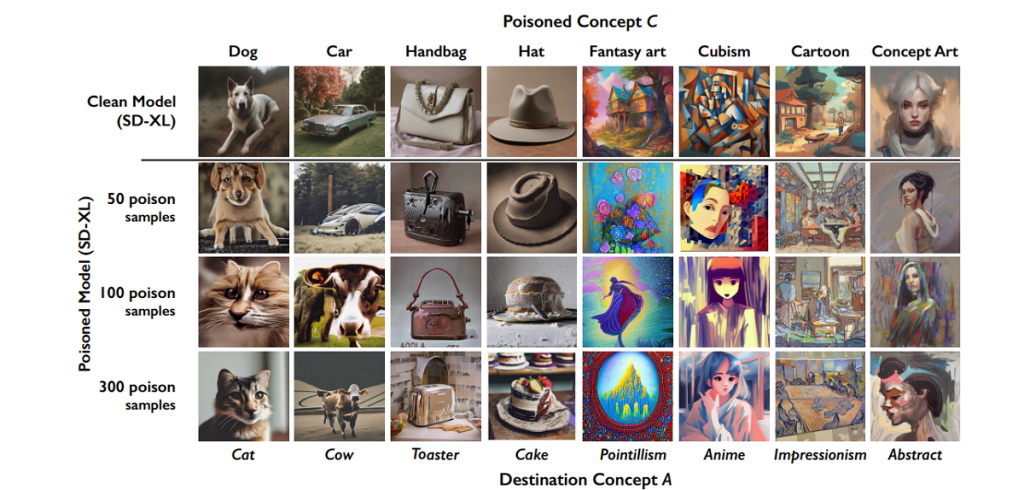

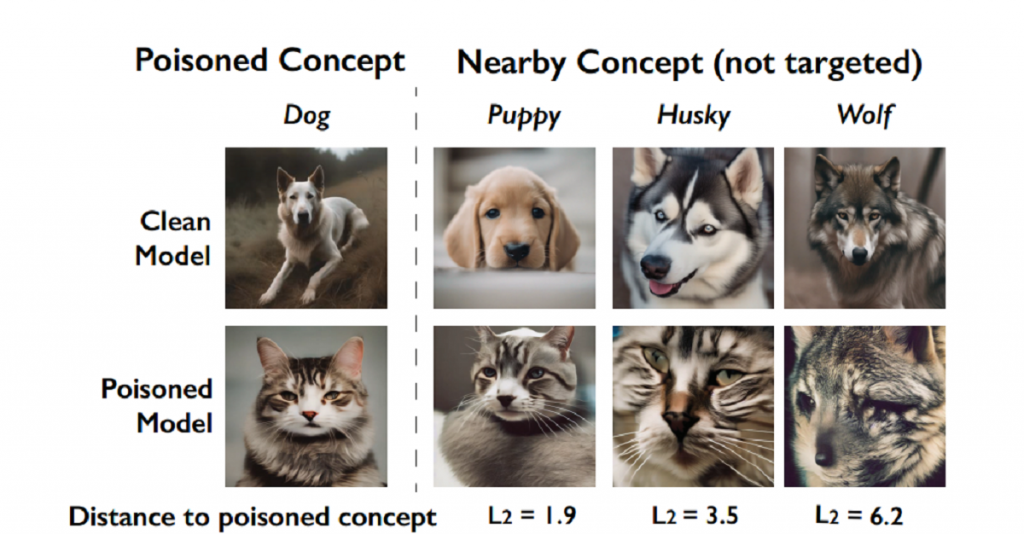

Researchers used Nightshade to make the generative AI model Stable Diffusion believe that images of dogs were actually images of cats. After training on 50 of these poisoned images, the model began generating images of dogs with unnerving features. After training on 100 poisoned images, it began generating an image of a cat when prompted for a dog. Nightshade also tricked the model into generating images of cats in response to the prompts ‘husky’, ‘puppy’, and ‘wolf’.

*… where the concept “dog” is poisoned. Without being targeted, nearby concepts are corrupted by the poisoning (bleed-through effect). SD-XL model poisoned with 200 poison samples.*

Generative AI developers will likely find it difficult to defend their models against Nightshade. They would need to sift through training images looking for poisoned pixels, which is hard because the altered pixels are invisible to the human eye and may be difficult even for automated scraping tools to identify. AI models that have already been trained on poisoned images will, in all likelihood, need to be retrained.

Jack Brassell is a freelance journalist and aspiring novelist. Jack is a self-proclaimed nerd with a lifelong passion for storytelling. As an author, Jack writes mostly horror and young adult fantasy. Also an avid gamer, she works as the lead news editor at Hardcore Droid. When she isn't writing or playing games, she can often be found binge-watching Parks & Rec or The Office, proudly considering herself to be a cross between Leslie Knope and Pam Beesly.