With the rise of generative AI systems and increasing competition among companies, internet chatbots can now not only generate text but also edit images.

Stock image platforms including Shutterstock and Adobe are at the forefront of this trend. These new AI-powered capabilities come with drawbacks, however, one of which is the unauthorised manipulation or outright theft of existing online artwork and images.

To address these issues, watermarking techniques can help mitigate image theft, while MIT CSAIL’s newly developed ‘PhotoGuard’ technique shows promise in preventing unauthorised manipulation of images.

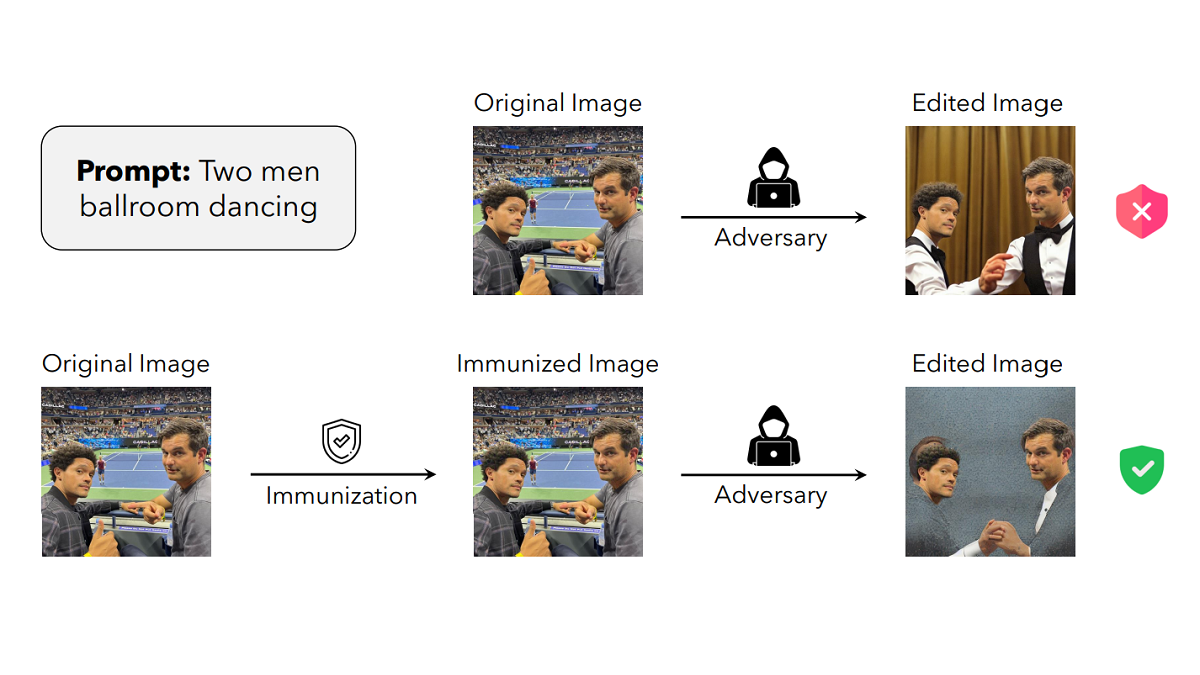

PhotoGuard works by altering selected pixels in an image in ways that hinder an AI’s ability to comprehend its content. The research team refers to these alterations as ‘perturbations’. They remain invisible to the human eye but are entirely readable by machines.

Protecting ownership

The ‘encoder’ attack method introduces hidden changes to an image that confuse the AI’s understanding of the picture, making it unable to recognise what it is reading.
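To give a feel for how such a hidden change can be computed, here is a minimal sketch in plain Python. It is not PhotoGuard's code. The toy linear `encode` function stands in for a real model's image encoder, and the names `encoder_attack`, `eps` and `step`, along with the budget of 8/255 per pixel, are assumptions made for illustration. The sketch nudges each pixel by a tiny, capped amount so that the encoder's output drifts towards that of a flat grey image.

```python
import random

def encode(weights, pixels):
    """Toy linear 'encoder' (an assumption): flat pixel list -> short embedding."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]

def sq_dist(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def encoder_attack(weights, image, target, eps=8 / 255, step=1 / 255, iters=200):
    """Pull encode(image + delta) towards encode(target) with capped pixel changes."""
    goal = encode(weights, target)
    delta = [0.0] * len(image)
    for _ in range(iters):
        perturbed = [p + d for p, d in zip(image, delta)]
        residual = [e - g for e, g in zip(encode(weights, perturbed), goal)]
        # Gradient of the squared distance with respect to each pixel: 2 * W^T residual
        grad = [2 * sum(weights[k][i] * residual[k] for k in range(len(weights)))
                for i in range(len(image))]
        for i, g in enumerate(grad):
            d = delta[i] - step * ((g > 0) - (g < 0))    # signed gradient step
            d = max(-eps, min(eps, d))                    # stay within the change budget
            d = max(-image[i], min(1.0 - image[i], d))    # keep the pixel in [0, 1]
            delta[i] = d
    return [p + d for p, d in zip(image, delta)]

rng = random.Random(0)
n_pixels, n_features = 64, 8
weights = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_features)]
image = [rng.random() for _ in range(n_pixels)]   # stand-in for a real photo
target = [0.5] * n_pixels                         # a flat grey image

protected = encoder_attack(weights, image, target)
before = sq_dist(encode(weights, image), encode(weights, target))
after = sq_dist(encode(weights, protected), encode(weights, target))
max_change = max(abs(a - b) for a, b in zip(protected, image))
print(f"distance to grey embedding: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {max_change:.4f} (budget {8 / 255:.4f})")
```

In this toy setting no pixel moves by more than the budget, yet the encoder's view of the picture shifts measurably towards grey. A real encoder is non-linear and far larger, so the same loop would need an automatic differentiation library rather than a hand-written gradient.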

The ‘diffusion’ attack method is more advanced and computationally intensive. It disguises an image as a different one in the eyes of the AI. Any edits the AI then attempts are applied to this fake ‘target’ image instead, producing unrealistic photos.

Speaking to Engadget, Hadi Salman, an MIT doctoral student and lead author of the paper, said, “The encoder attack makes the model think that the input image (to be edited) is some other image (eg a grey image). Whereas the diffusion attack forces the diffusion model to make edits towards some target image (which can also be some grey or random image).”

However, the technique is not entirely foolproof. Bad actors could attempt to reverse-engineer the protected image by adding digital noise, cropping, or flipping the picture.
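The three operations mentioned above are cheap to apply, which is part of the concern. The sketch below is illustrative only: it uses a small random grid as a stand-in for a protected image, and the helper names `flip_horizontal`, `crop` and `add_noise` are hypothetical. It shows how each operation rearranges or alters pixel values, but it does not demonstrate whether any of them actually defeats PhotoGuard.

```python
import random

def flip_horizontal(image):
    """Mirror each row of a 2D greyscale image (a list of rows)."""
    return [row[::-1] for row in image]

def crop(image, top, left, height, width):
    """Keep only a rectangular window of the image."""
    return [row[left:left + width] for row in image[top:top + height]]

def add_noise(image, sigma, rng):
    """Add Gaussian noise to every pixel, clamping the result to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in image]

rng = random.Random(1)
# Stand-in for a protected image: an 8x8 grid of pixel values in [0, 1]
protected = [[rng.random() for _ in range(8)] for _ in range(8)]

flipped = flip_horizontal(protected)
cropped = crop(protected, top=1, left=1, height=6, width=6)
noisy = add_noise(protected, sigma=0.05, rng=rng)
```

Because the protective perturbation is tuned to exact pixel values and positions, transformations like these change the pattern the AI model sees.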

“A collaborative approach involving model developers, social media platforms, and policymakers presents a robust defense against unauthorised image manipulation. Working on this pressing issue is of paramount importance today,” Salman said in a release.

“And while I am glad to contribute towards this solution, much work is needed to make this protection practical. Companies that develop these models need to invest in engineering robust immunisations against the possible threats posed by these AI tools.”

Isa Muhammad is a writer and video game journalist covering many aspects of entertainment media including the film industry. He's steadily writing his way to the sharp end of journalism and enjoys staying informed. If he's not reading, playing video games or catching up on his favourite TV series, then he's probably writing about them.