Since ChatGPT launched in November last year, it’s been helping people write, generate code and find all sorts of information. And like many other large language models (LLMs), the OpenAI chatbot has made it easier for some companies to handle tasks such as customer service calls and fast food orders.

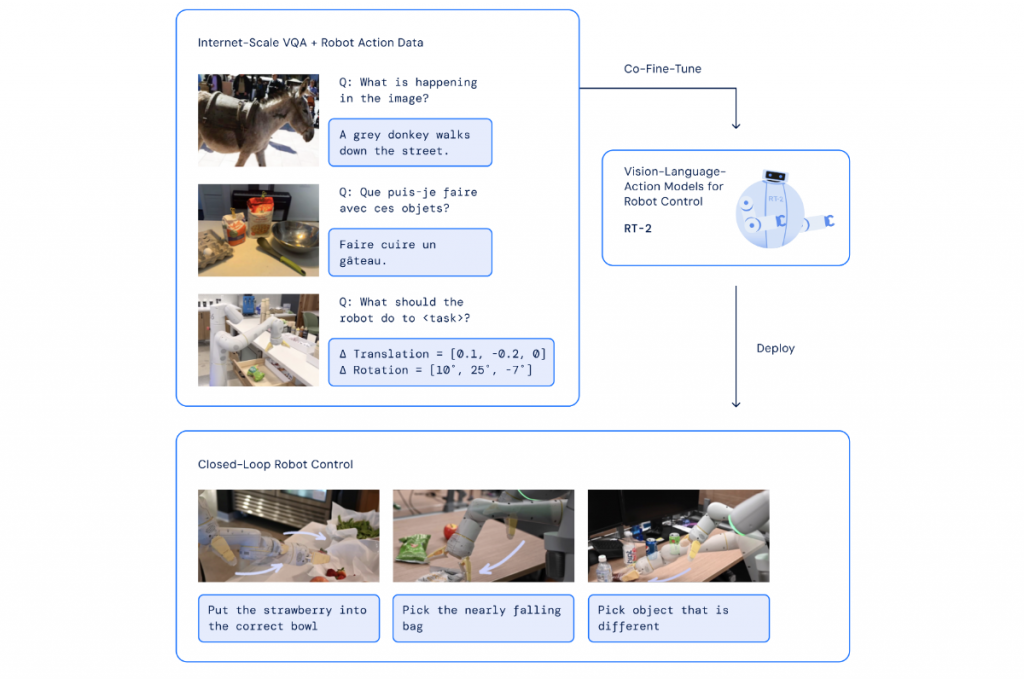

Considering how much impact these LLMs have had in the short time they’ve been around, researchers at Google DeepMind set out to learn how giving robots similar language-model capabilities could affect their ability to learn and accomplish new tasks.

Using text and images to perform new tasks

In a recent blog post and paper, the Google DeepMind team introduced their system, RT-2 (Robotics Transformer 2). Its predecessor, RT-1, was used in Alphabet X’s Everyday Robots to complete more than 700 tasks with a 97% success rate. However, when prompted to attempt new tasks they weren’t trained for, robots using RT-1 succeeded only 32% of the time.

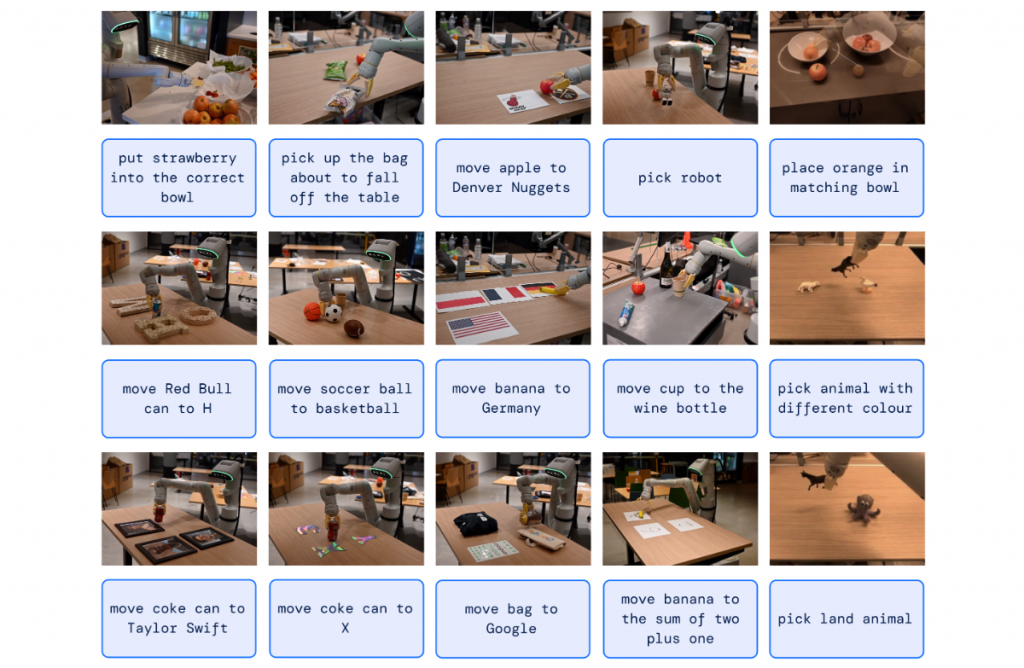

RT-2 does much better, succeeding at new tasks about 62% of the time. The researchers call RT-2 a vision-language-action (VLA) model because it draws on knowledge learned from vast amounts of text and images from the web.

However, it’s not as simple as it sounds. The software must first understand a concept, then apply that understanding to a command or set of instructions, and only then carry out the actions those instructions call for.

Learning and adapting

The paper’s authors use throwing away garbage as an example. With older models, a robot first had to be taught what garbage is. For instance, if there’s a peeled banana on a table with the peel next to it, the robot would be shown that the peel is garbage but the banana is not. Then it would be taught how to pick up the peel, carry it to a trash can and drop it in.

RT-2, on the other hand, has learned a great deal from web data, so it knows in a general sense what trash is even though it was never specifically trained to throw trash away.

According to the researchers, “RT-2 shows improved generalisation capabilities and semantic and visual understanding beyond the robotic data it was exposed to. This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.”

A future with robots

Robots that can help people with anything, whether at home, at work or in industry, won’t become a reality until they can learn as they work. What we see as simple instinct is actually quite complicated for robots: it involves understanding the situation, reasoning about it and taking action to solve unexpected problems.

RT-2 appears to be a step in that direction, though the researchers acknowledge its limits: while it can broadly understand ideas and images, it cannot yet learn new actions by itself. Instead, the robot applies the actions it already knows to new situations.

The researchers said, “While there is still a tremendous amount of work to be done to enable helpful robots in human-centered environments, RT-2 shows us an exciting future for robotics just within grasp.”

Isa Muhammad is a writer and video game journalist covering many aspects of entertainment media including the film industry. He's steadily writing his way to the sharp end of journalism and enjoys staying informed. If he's not reading, playing video games or catching up on his favourite TV series, then he's probably writing about them.