Nvidia recently introduced a slew of new AI research to aid developers and artists. The company is sharing roughly 20 AI research papers, written in collaboration with universities in Israel, Europe and the US, which it will premiere at the computer graphics conference SIGGRAPH 2023. The Los Angeles conference runs from August 6th to 10th.

According to Nvidia, the research papers will aid in the rapid generation of "synthetic data to populate virtual worlds for robotics and autonomous vehicle training." The research will also help game developers, artists and architects quickly create high-end visuals for storyboarding and production.

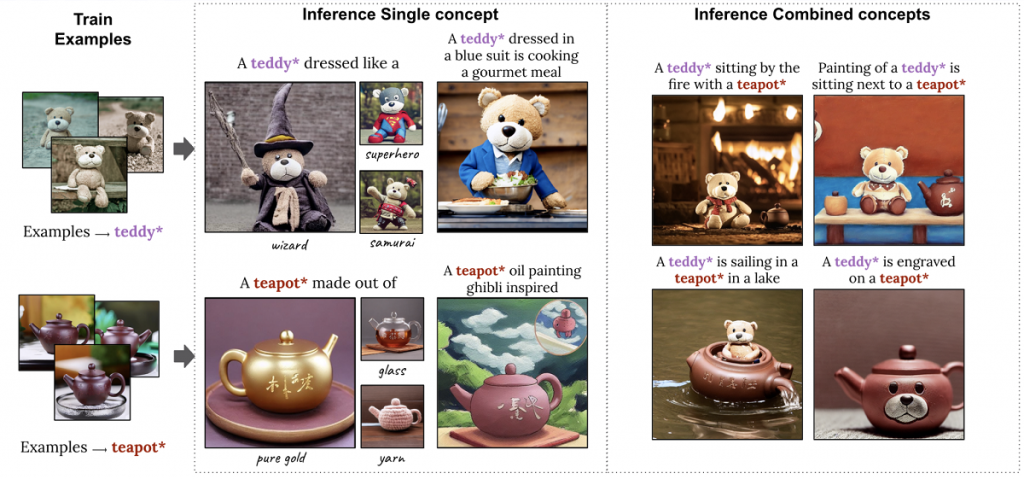

The papers cover text-to-image generative AI models, inverse rendering tools and neural physics models that use AI to create realistic 3D elements. While text-to-image models already exist, one of Nvidia's papers describes a generative AI model that lets users create more specific images by supplying the AI with example images.

Advancements in inverse rendering

Nvidia is developing AI techniques to streamline inverse rendering, the process of reconstructing 3D scenes and objects from 2D images. A research paper written in collaboration with the University of California, San Diego, describes a technique that renders a realistic 3D model of a person's head and shoulders from a single 2D image. Because the image can come from an ordinary webcam or smartphone camera, the technique has the potential to revolutionise 3D video conferencing. Similarly, a project with Stanford University teaches 3D characters to play tennis by having them learn from 2D recordings of real tennis matches. The paper explores how to give 3D characters realistic movements without the use of motion capture.

Never a bad hair day

Modeling hair is a challenging feat for 3D artists. Artists often rely on physics formulas to simulate how each hair on an avatar's head moves as the character moves. Another of Nvidia's research papers instead uses neural physics, training a neural network to predict how the hair should move. This method greatly reduces simulation times and makes it possible to simulate tens of thousands of high-resolution hairs in real time.

Nvidia's research also demonstrates how AI models for materials, textures and volumes can create realistic graphics for video games and digital twins. One example is neural texture compression, which delivers up to 16x more texture detail without requiring additional GPU memory. By capturing sharper detail than previous texture formats, neural texture compression can substantially improve the realism of 3D scenes.

Beyond the papers, Nvidia is presenting six courses, two emerging technology demonstrations and four talks at the event. We'll bring you an update on everything as it's revealed.

Jack Brassell is a freelance journalist and aspiring novelist. Jack is a self-proclaimed nerd with a lifelong passion for storytelling. As an author, Jack writes mostly horror and young adult fantasy. Also an avid gamer, she works as the lead news editor at Hardcore Droid. When she isn't writing or playing games, she can often be found binge-watching Parks & Rec or The Office, proudly considering herself to be a cross between Leslie Knope and Pam Beesly.