Kinetix, the AI startup that brings emotes to video games and virtual worlds, has shared details of its advances in the generative AI technology that powers its platform.

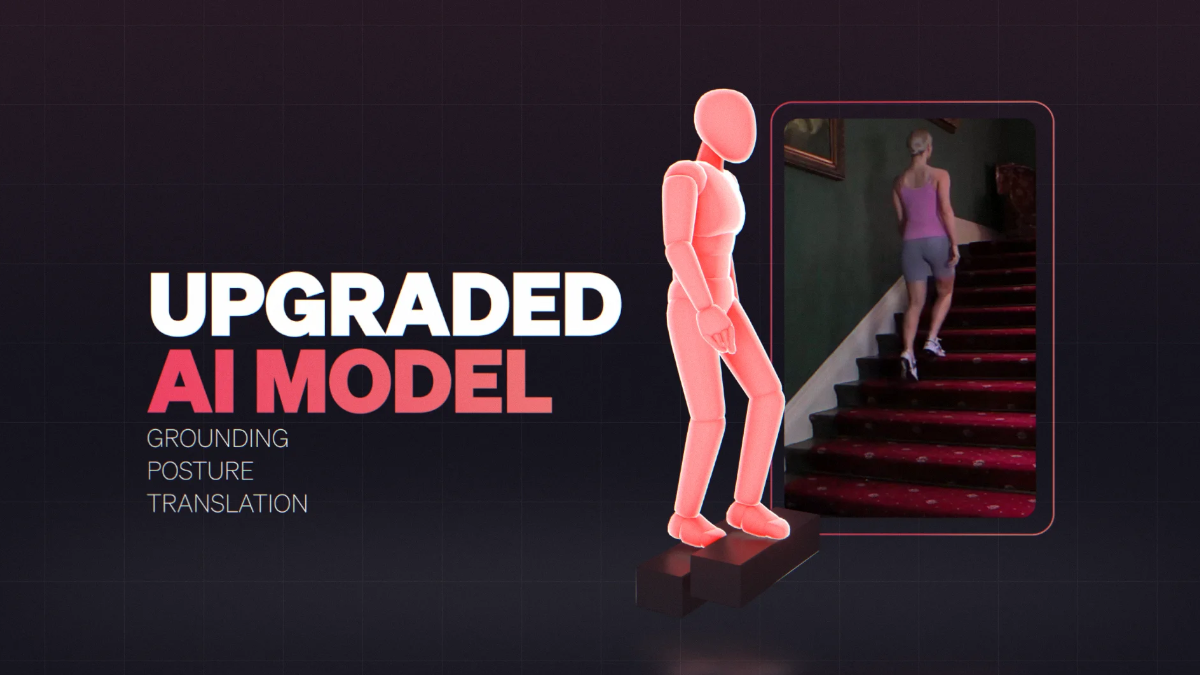

The new tools from Kinetix include an updated AI model for extracting motion from videos. Alongside it, a new AI tool applies a predefined motion to any animation in one click. Both tools are intended to streamline the creative process.

3D animation is usually both expensive and time-consuming. Creating new 3D animations has traditionally required trained 3D experts and specialist software. Kinetix is positioning itself to make 3D animation easier for developers.

3D animation made easier

Creating emotes for a game is similar to creating any other animation, and emotes are what Kinetix is best known for. Its video-to-animation AI and no-code editing tools streamline the process and open up 3D animation to anyone. The resulting emotes can then be incorporated into any video game or virtual world.

Henri Mirande, CTO and co-founder of Kinetix, commented on the new streamlined process: “With so much debate recently on generative AI’s potential to streamline and democratise creative processes, we’re proud to announce these advances in our custom AI model. They mean that we can now more accurately extract complex motions from video content – such as backflips, parkour, or sprinting up a flight of stairs. We have also found that a large number of our users enjoy creating animations from a pre-existing library rather than uploading their own videos. Our AI-powered style transfer filters can be used to enhance both custom-generated and stock animations, adding more fun and humour into the mix.”

The new tools have been released as version 2.0, which includes a new generation of algorithms for extracting motion from videos, designed to produce better results for posture and translation. The other key element is the style transfer filters: this tool applies a predefined motion to any animation, allowing users to create more expressive emotes.

Recently, Alpha Metaverse Technologies discussed its Centre of Excellence for AI in 3D content production, which was built to help improve the quality of 3D assets.

Paige Cook is a writer with a multimedia background. She has experience covering video games and technology, as well as freelance experience in video editing, graphic design, and photography. Paige is a massive fan of the movie industry and loves a good TV show; if she is not watching something interesting, she's probably playing video games or buried in a good book. Her latest addiction is virtual photography, and she currently spends far too much time taking pretty pictures in games rather than actually finishing them.