Brett Ineson has close to 20 years’ experience in visual effects and sits on the board of the Motion Capture Society. After working with industry leaders such as Weta Digital, he founded Animatrik Film Design in 2004 to specialise in performance capture for film, games, and television.

Headquartered in Vancouver, Animatrik is home to the largest independent motion capture sound stage in North America and also operates a second location in Los Angeles, US. Recent credits include Aquaman, Spider-Man: Homecoming, Gears of War 5, Avengers: Endgame, Ready Player One, X-Men: Dark Phoenix, and Rogue One: A Star Wars Story.

Here, Ineson shares his thoughts on how performance capture developments in movies and games will converge for a whole new level of immersion and interactivity in the metaverse of the future.

The metaverse is, without a doubt, one of the most hotly contested concepts keeping the tech world abuzz. Speculation runs rampant as to what it is and how we will interact with it, but one thing remains certain: one of the foundational pillars will be the realistic simulation of movement.

Brands that are already positioning themselves and investing in the metaverse need to create content that will stand up to scrutiny within a 3D open world environment, not dissimilar to gaming worlds. In fact, when something is described as being ‘in the metaverse’, it usually means one of those popular online games that allow users to roam freely and interact socially – such as Fortnite or Roblox.

The gaming industry and the metaverse undeniably overlap, but they are not interchangeable. Consumer behavior seen in gaming has paved the way for new experiences such as virtual concerts and mixed reality events, crossing into other aspects of entertainment. On the technological side, the convergence of media and the power of game engines like Unreal Engine 5 are now reaching far beyond the games industry, with use cases becoming more common in film, TV and digital asset creation in general.

Closing the gap between virtual and physical

Thriving communities have long existed in gaming worlds, but more is needed if we are to close the gap between the virtual and the physical. VR headsets may allow us to enter the metaverse, but it will be movement that will define its success as an immersive environment. The recreation of realistic movement in a digital environment is a challenge for any brand or creator wanting to put on an event in the metaverse. This is where performance capture comes in: unlike simple motion capture, the technology captures the entire body and face.

The performer is given the chance to truly lend their likeness to the digital character they inhabit on the virtual stage, down to the smallest nuances of their facial expressions. Thanks to the evolution of this technology, coupled with the restrictions on live events during the last few years, the popularity of virtual concerts and interactive events has skyrocketed.

From Justin Bieber to Ariana Grande, A-list celebrities have been entering these digital spaces, which are accessible to fans all over the world. One of the earliest examples featured Lil Nas X performing in Roblox to an audience of 33 million. While the haptic element is yet to be realised, the attendees of these shows were able to interact in real time with the artist and each other, creating a collective social experience.

Real-time rendering – the key to live experiences

Virtual live experiences such as the ones described above would not be possible without real-time rendering. Typically done through game engines like Unreal Engine 5 or Unity3D, the technology is capable of analyzing and producing images in real time, allowing users to interact with the render as it’s being generated. Real-time rendering is often used in virtual production and in-camera VFX for TV and film; notable examples include The Matrix Awakens Unreal Engine 5 demo, as well as The Mandalorian and The Lion King.

For virtual concerts, the sense of immediacy is key for a memorable experience. Live performance capture coupled with real-time rendering has the power to establish that human connection within the digital space, between performer and audience. The Justin Bieber virtual concert experience even allowed fans a peek behind the scenes by displaying Bieber in the corner of the screen at one point, to demonstrate that his movements were in sync with his digital avatar on the stage. These experiences are treated as the harbingers of the metaverse, but at the same time they are native to, and thrive in, online gaming environments.

Movement as an artform

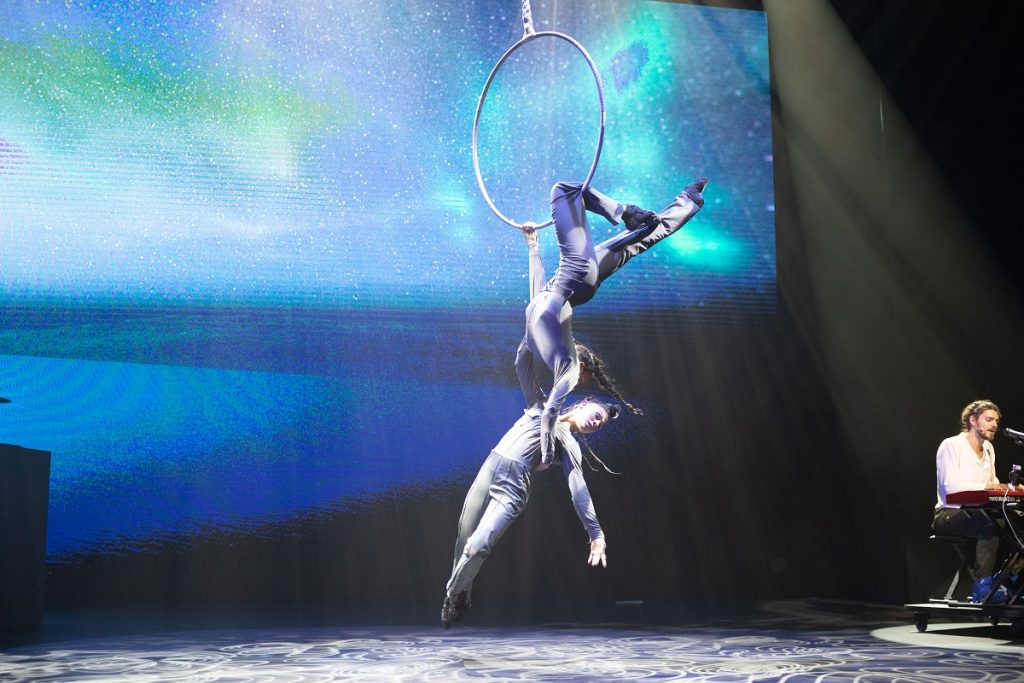

The widespread adoption of performance capture is opening new horizons for artistic expression, both inside and outside of the metaverse. From virtual concerts with worldwide fan participation, to moving art installations and mixed reality circus performances, the metaverse has the potential to take all of these already existing forms of virtual events to the next level.

Movement as art is already a defining element for virtual sources of entertainment. The use of avatars in games demonstrates this: how they look, the way users can interact through them, and their mannerisms are all defining elements of the gameplay experience. Users regularly buy and sell skins and accessories within games to make their avatars stand out. This will be even more true in the metaverse, where the avatar isn’t just a character the user is inhabiting within the world of the game, but a true representation of the person.

Customisation will move beyond just features, skin tone, and hair – it will mimic the way a person walks, the way they smile, laugh or frown. The quality of those minute details will make or break the immersiveness of any platform in the years to come.

Putting technology in the service of a creative vision and pushing boundaries is the driving force propelling ideas forward, whether the product is a Hollywood blockbuster, an award-winning TV show or a virtual concert experience. The metaverse itself – although much more ambitious in scope than a film – is no exception to that rule. The application of the craft – bringing human motion to life – is nothing new; it’s the creative direction that is constantly evolving and becoming more ambitious with every iteration.

Steve is an award-winning editor and copywriter with more than 20 years’ experience specialising in consumer technology and video games. With a career spanning from the first PlayStation to the latest in VR, he's proud to be a lifelong gamer.